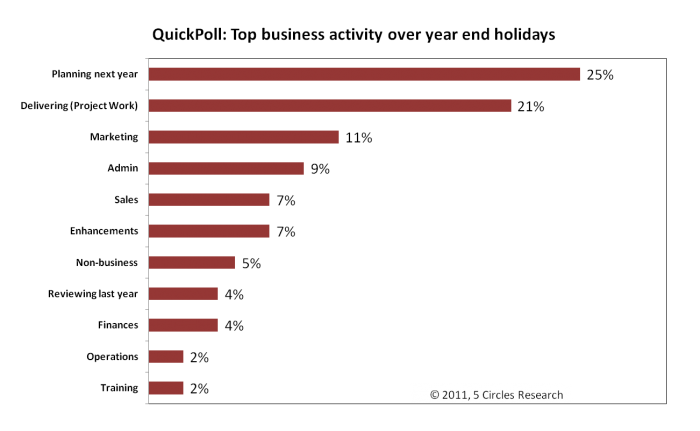

We asked entrepreneurs, consultants and small business owners how they were spending their time over the holiday period.

The question asked about the TOP activity, so people needed to prioritize. The most popular answers were “planning next year” and “delivering to customers”, recognizing both the need to look forward and (presumably) the need to complete tasks. It would be interesting to see if planning is as popular at a time of year when New Year isn’t a factor. Reviewing last year wasn’t as common a response. Perhaps people are doing continual reviews (I doubt it), or more likely they have recognized the need and the opportunity for bigger shifts, so looking back isn’t as relevant.

An expert in collaborative strategy planning, Robert Nitschke of Arago Partners, tells me that many companies take until the end of Q1 to complete their strategic plan for the year. When will yours be done?

Idiosyncratically,

Mike Pritchard